Large online services such as Netflix and Amazon have been doing it for years: Chaos Engineering, deliberately introducing chaos in production to expose bottlenecks and single points of failure. This can be done in many different ways, from physically disconnecting cables to injecting random behavior into the code.

Of course, the extent to which you apply this depends on many factors, among them the consequences of downtime or of applications that only partially work. Are there financial risks, immediately life-threatening situations or brand damage, or is it acceptable to be unavailable for a while? The greater the risks, the more thoroughly applications and environments must be tested and secured.

This is a complex issue but even for the smaller players, it is important to explore the possibilities and see if, on a smaller scale or not, Chaos Engineering can contribute to a better service.

One of these possibilities is using Simmy and Polly in .NET applications. Both Polly and Simmy are part of The Polly Project.

POLLY

Using Polly, it is possible to apply complex rules (policies) that allow parts of the code to be re-executed when errors occur.

EXAMPLE: RENEW AUTHENTICATION

Case in point: for the mobile application of a major car brand, I once had to develop a new screen to show the status of the vehicle: is the tire pressure in order, are the doors locked, does an appointment need to be made for a service? The data for this had to be extracted from an external application that used dual security, general security for the API and personal security for the car’s data. When calling the API, one or even both of the two “tokens” could have expired and needed to be renewed. A complex scenario for which Polly really only needs two or three lines:
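As a minimal sketch of what such rules can look like, assuming the Polly v7-style `Policy` API: the class `VehicleStatusClient`, its helper methods and the mapping of each exception to a particular token are hypothetical and only illustrate the idea, they are not the code from the original project.

```csharp
using System;
using System.Threading.Tasks;
using Polly;

// Named in the article; its definition is not shown, so this is a stand-in.
public class TokenExpiredException : Exception { }

public class VehicleStatusClient
{
    // Hypothetical state and helpers standing in for the real token handling and API call.
    private bool _vehicleTokenValid = false;

    private Task RenewApiTokenAsync() => Task.CompletedTask;

    private Task RenewVehicleTokenAsync()
    {
        _vehicleTokenValid = true;
        return Task.CompletedTask;
    }

    private Task<string> FetchStatusAsync() =>
        _vehicleTokenValid
            ? Task.FromResult("doors locked")
            : Task.FromException<string>(new TokenExpiredException());

    public Task<string> GetStatusAsync()
    {
        // Rule 1: on UnauthorizedAccessException, renew the API token, then retry once.
        var apiTokenPolicy = Policy
            .Handle<UnauthorizedAccessException>()
            .RetryAsync(1, onRetryAsync: (exception, attempt) => RenewApiTokenAsync());

        // Rule 2: on TokenExpiredException, renew the vehicle token, then retry once.
        var vehicleTokenPolicy = Policy
            .Handle<TokenExpiredException>()
            .RetryAsync(1, onRetryAsync: (exception, attempt) => RenewVehicleTokenAsync());

        // Merge both rules so that they both apply to the same call.
        return Policy
            .WrapAsync(apiTokenPolicy, vehicleTokenPolicy)
            .ExecuteAsync(() => FetchStatusAsync());
    }
}
```

In this sketch the first call fails with an expired vehicle token, the matching rule renews it, and the retried call succeeds without the caller noticing.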

Two errors are handled in the above code: an ‘UnauthorizedAccessException’ and a ‘TokenExpiredException’. For each of the two errors, before data is retrieved again, a line of code is first executed to fix the problem. The two rules are merged so that both rules apply to the call.

EVEN MORE COMPLEX: CIRCUIT BREAKER, RETRY AND FALLBACK

Re-running code should be well thought out. For example, can you infer from the error that the problem cannot be resolved for the time being? Then retrying is of no use right now. If an (external) service indicates that it is very busy, calling it again may even prevent the service from recovering at all.

There are ways to deal with this with Polly as well. In addition to various options for retrying scenarios (retries), it is also possible to define alternative scenarios (fallbacks) or to temporarily make retrying impossible (circuit breaker).

Is a service unable to write data to a database because the database is too busy? As an alternative, you can temporarily write the data to a text file or put it in a queue.

Does a service indicate that too many calls have been made within a certain period of time? Temporarily open the circuit and make the application wait until the circuit closes again.

Even a combination of these need not look very complex in code:

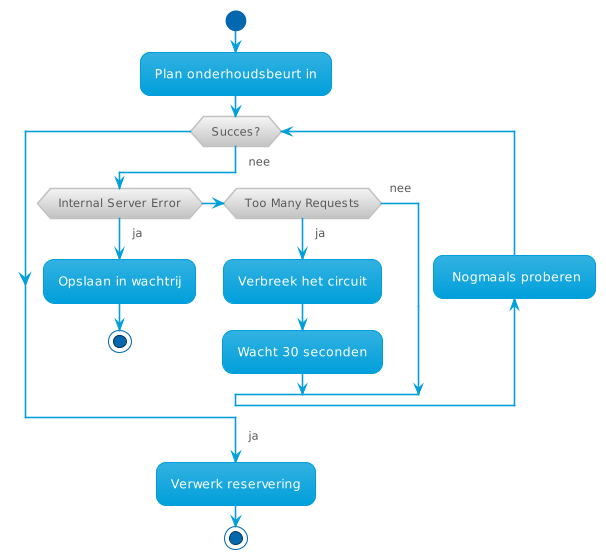
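A rough sketch of such a combination, again assuming the Polly v7-style API: the `ResilientWriter` class, its two write methods and all thresholds and delays are made up for illustration. The database write always fails here so that the fallback path is visible.

```csharp
using System;
using System.Threading.Tasks;
using Polly;
using Polly.CircuitBreaker;

public static class ResilientWriter
{
    // Circuit breaker: after two consecutive failures, reject calls for 30 seconds.
    // It is kept in a static field so that its state is shared between calls.
    private static readonly IAsyncPolicy<string> Breaker = Policy<string>
        .Handle<TimeoutException>()
        .CircuitBreakerAsync(2, TimeSpan.FromSeconds(30));

    // Hypothetical stand-ins: the database is always too busy, the queue always works.
    private static Task<string> WriteToDatabaseAsync(string data) =>
        Task.FromException<string>(new TimeoutException("database too busy"));

    private static Task<string> WriteToQueueAsync(string data) =>
        Task.FromResult("queued");

    public static Task<string> SaveAsync(string data)
    {
        // Retry: up to three more attempts, waiting a little longer each time.
        var retry = Policy<string>
            .Handle<TimeoutException>()
            .WaitAndRetryAsync(3, attempt => TimeSpan.FromMilliseconds(100 * attempt));

        // Fallback: if retries run out or the circuit is open, use the queue instead.
        var fallback = Policy<string>
            .Handle<TimeoutException>()
            .Or<BrokenCircuitException>()
            .FallbackAsync(cancellationToken => WriteToQueueAsync(data));

        // Outermost first: fallback wraps retry, which wraps the circuit breaker.
        return Policy
            .WrapAsync<string>(fallback, retry, Breaker)
            .ExecuteAsync(() => WriteToDatabaseAsync(data));
    }
}
```

The order of wrapping matters: because the retry rule does not handle `BrokenCircuitException`, retrying stops as soon as the circuit opens and the fallback takes over.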

Schematically, this looks something like the diagram below:

RESILIENCE

Providing services and applications with rules for choosing between different scenarios when problems arise makes for better software that can withstand a range of problems. In other words: resilience.

SIMMY

When working out possible scenarios, it quickly becomes apparent that however much you think them through, in practice the one scenario you did not think of will come along. Perhaps you are subconsciously biased because you know how the application works, or there are simply too many complex scenarios to consider. To catch such cases and test the application better, you can inject chaos. People often speak of Chaos Monkeys: you let them loose in your application without knowing what they are going to get up to.

FOUR FORMS OF CHAOS

Simmy is actually a descendant of Polly and works the same way: you create rules (policies) that you can combine as needed.

There are currently 4 forms of chaos:

- errors (exceptions): instead of successful processing, an error is regularly returned;

- result: instead of the expected, correct result, something completely different is returned;

- delay (latency): by building in different delays, you can test how systems handle slow connections to external services or databases;

- behavior: this makes it possible to build additional behavior into the application. In an example from Simmy’s documentation, database tables are even dropped before a call is processed in order to mimic extreme situations.

For each form of chaos, you can indicate whether a particular rule is switched on and for what percentage of calls it should be applied.

The code below is an example of ‘exception’ chaos: for 50% of all requests for related products, an ‘InvalidDataException’ is thrown:
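A minimal sketch of such a rule, assuming the fluent options syntax of the `Polly.Contrib.Simmy` package: `RelatedProductsService` and its lookup method are hypothetical stand-ins, not the article's original code.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;
using Polly;
using Polly.Contrib.Simmy;
using Polly.Contrib.Simmy.Outcomes;

public static class RelatedProductsService
{
    // Chaos rule: throw an InvalidDataException for roughly 50% of executions.
    private static readonly IAsyncPolicy ChaosPolicy =
        MonkeyPolicy.InjectExceptionAsync(with =>
            with.Fault(new InvalidDataException("chaos: related products unavailable"))
                .InjectionRate(0.5)
                .Enabled());

    // Hypothetical stand-in for the real product lookup.
    private static Task<string[]> LoadRelatedProductsAsync(int productId) =>
        Task.FromResult(new[] { "floor mats", "roof rack" });

    // Every lookup runs through the chaos rule before the real work is done.
    public static Task<string[]> GetRelatedProductsAsync(int productId) =>
        ChaosPolicy.ExecuteAsync(() => LoadRelatedProductsAsync(productId));
}
```

In a real application the chaos rule would typically be the innermost policy in a wrap, so that retries, circuit breakers and fallbacks around it get exercised by the injected errors.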

WHAT TO DO IN THE EVENT OF CHAOS

Injecting chaos is, of course, not a goal in itself. The goal is to be able to withstand both anticipated and unanticipated problems. A couple of common examples: if you are shopping at Amazon and the service that offers alternatives has a glitch, it is still possible to place an order. You may not see any alternatives, or you may see a few regular, best-selling products instead. Chances are you won’t even notice. With Netflix, you may be temporarily unable to see the latest releases or the top 10 while still being able to watch your favorite series. In both cases, the service as a whole does not go down and the most important functionality continues to work. This will not always be possible, but by thinking carefully, testing and developing alternatives, you are constantly working to improve services.

CONFIGURING RULES

Turning chaos on and off can be done in several ways: in the application’s code, in configuration files, remotely using external web services or even in real time with Azure App Configuration. The options are too diverse to discuss them all here; check the source references, the sample project provided or Simmy’s documentation for all possible options. It is especially important to think carefully about how Chaos Monkeys are turned on and off. Are you testing in production? Then you probably want to be able to intervene immediately if things go wrong. In other environments, by contrast, it may be important to be able to set the degree of chaos so that manual testing can quickly reveal its consequences.
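One way to make a rule switchable at runtime is to let it read its settings on every call. The sketch below assumes the delegate-based `InjectionRate` and `EnabledWhen` options of `Polly.Contrib.Simmy` as I understand them; the `ChaosSettings` class is hypothetical and could be bound to a configuration file or a remote configuration store.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;
using Polly;
using Polly.Contrib.Simmy;
using Polly.Contrib.Simmy.Outcomes;

// Hypothetical settings object; something outside this sketch is assumed to update it.
public class ChaosSettings
{
    public bool Enabled { get; set; }
    public double InjectionRate { get; set; }
}

public static class ChaosPolicies
{
    // The delegates are evaluated on each execution, so changing the settings
    // takes effect immediately without rebuilding the policy.
    public static IAsyncPolicy CreateExceptionPolicy(ChaosSettings settings) =>
        MonkeyPolicy.InjectExceptionAsync(with =>
            with.Fault(new InvalidDataException("chaos injected"))
                .InjectionRate((context, token) => Task.FromResult(settings.InjectionRate))
                .EnabledWhen((context, token) => Task.FromResult(settings.Enabled)));
}
```

With a setup like this, setting `Enabled` to `false` acts as the emergency stop in production, while `InjectionRate` can be raised in a test environment to make the effects of chaos easier to observe.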

TESTING

How do you actually test chaos? Fixed test paths and scripts will probably not help, because you never know whether there was chaos during a given test. Measuring is knowing, especially when it comes to chaos in production. Does the number of orders decrease significantly? Are predefined thresholds crossed? Is the number of recorded errors increasing? These are all measurements that help determine whether everything continues to work as it should. Chances are that many of these measurements and tools are already in use for new releases and for managing current software.

If you can turn rules on and off yourself, you can also decide when it is a good time to introduce chaos: when it is quiet or precisely when it is busy, after new releases or maybe even at random, announced in advance or without the teams being aware.

FINALLY

When reading into the matter you can define four phases of cloud adoption. My first introduction was this cool graph:

Not only the big players but also smaller ones can provide better service using Chaos Engineering. Obviously, it is a complex and potentially costly choice in which balancing costs and benefits is very important. It also requires an adjustment in our way of thinking and working, especially if you are going to bring chaos into your production environment. Hopefully this article has at least helped you think about it and has given you a little more insight into Chaos Engineering. I would love to hear any questions or comments, and I look forward to seeing whether you put the knowledge gained to use!

SOURCES

- SDN recording

- Polly

- Simmy

- What is Simmy?

- Simmy and Azure App Configuration

- Kolton Andrus on Lessons Learnt From Failure Testing at Amazon and Netflix and New Venture Gremlin, InfoQ Podcast

- Simmy and Chaos Engineering Geovanny Alzate Sandoval, Adventures In .Net Podcast

- Chaos Engineering with Charles Torre, MS Dev Show Podcast

- Netflix Chaos Engineering

- Netflix Chaos Monkeys

- Project Waterbear (LinkedIn)

- Polly Sample Project (GitHub)

- Simmy Sample Project (GitHub)
